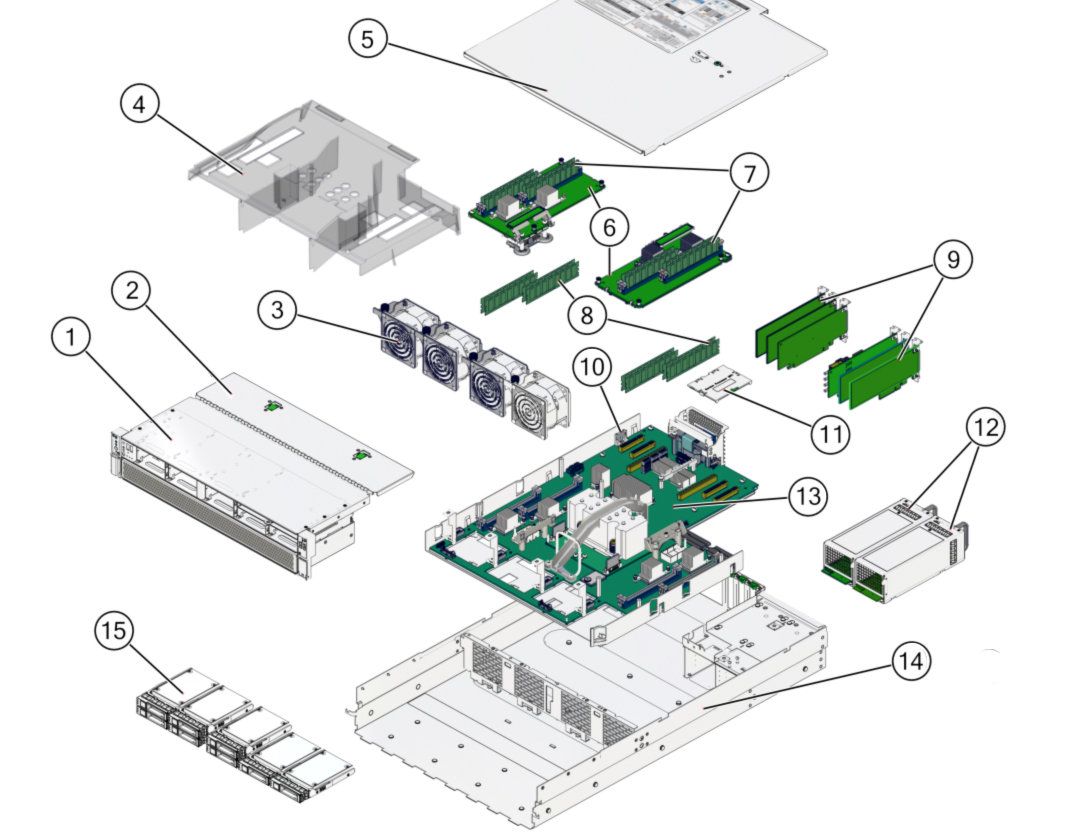

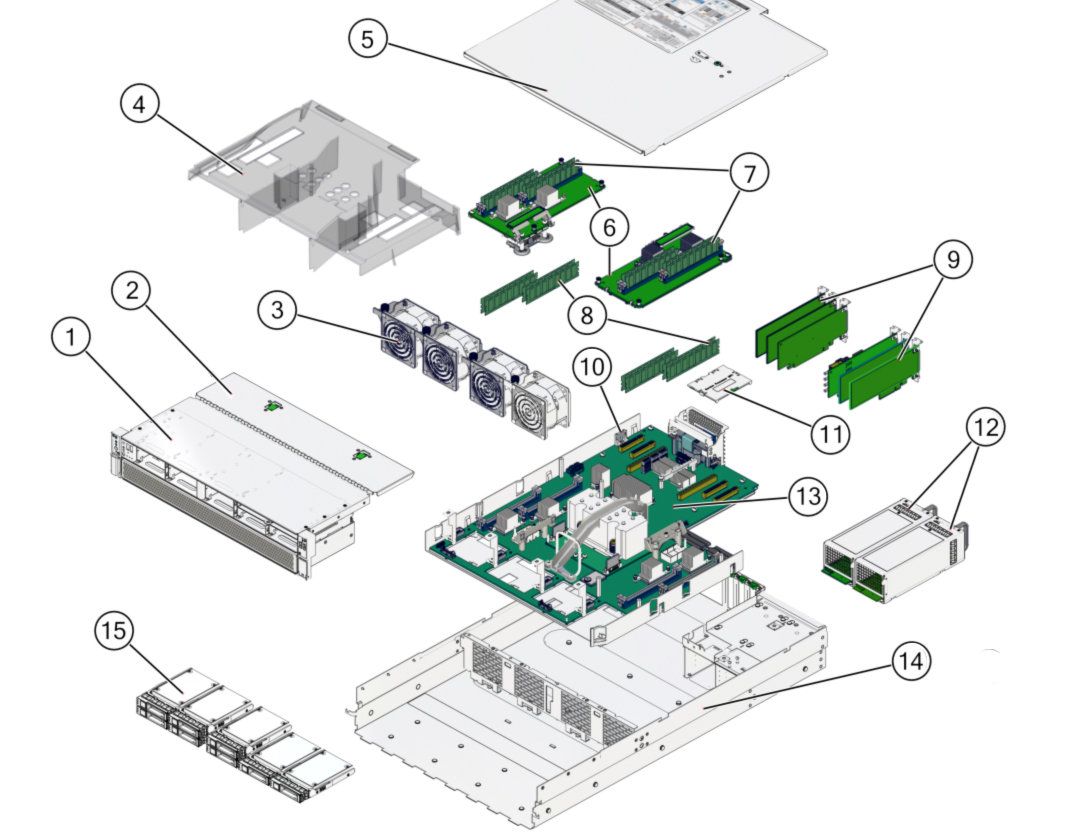

Internal Component Locations

The following figure identifies the replaceable component locations with the top cover removed.

Note - The two memory risers are optional.

| No. | Component | Name |
| --- | --- | --- |
| 1 | Drive cage (drive backplane on the rear of the drive cage) | /SYS/DBP |
| 2 | Fan module cover | |
| 3 | Fan modules | As viewed from front of server:<br>/SYS/MB/FM0 (left)<br>/SYS/MB/FM1 (left center)<br>/SYS/MB/FM2 (right center)<br>/SYS/MB/FM3 (right) |
| 4 | Airflow cover | |
| 5 | Top cover | |
| 6 | Memory risers | /SYS/MB/CM/CMP/MR0<br>/SYS/MB/CM/CMP/MR1 |
| 7 | DIMMs on memory risers | /SYS/MB/CM/CMP/MR[0-1]/BOB[0-3]1/CH[0-1]/DIMM |
| 8 | DIMMs on motherboard | /SYS/MB/CM/CMP/BOB[0-3]0/CH[0-1]/DIMM |
| 9 | PCIe cards | /SYS/MB/PCIE1<br>/SYS/MB/PCIE2<br>/SYS/MB/PCIE3<br>/SYS/MB/PCIE4<br>/SYS/MB/PCIE5<br>/SYS/MB/PCIE6 |
| 10 | Battery | /SYS/MB/BAT |
| 11 | SPM | /SYS/MB/SPM |
| 12 | Power supplies | /SYS/PS0 (outer)<br>/SYS/PS1 (inner) |
| 13 | Motherboard | /SYS/MB |
| 14 | Chassis | |
| 15 | Drives | /SYS/DBP/HDD0 (lower left)<br>/SYS/DBP/HDD1<br>/SYS/DBP/HDD2 or /SYS/DBP/NVME0<br>/SYS/DBP/HDD3 or /SYS/DBP/NVME1<br>/SYS/DBP/HDD4 or /SYS/DBP/NVME2<br>/SYS/DBP/HDD5 or /SYS/DBP/NVME3<br>/SYS/DBP/HDD6<br>/SYS/DBP/HDD7 (right) |
| | Processor chip (replaceable only by replacing the motherboard) | /SYS/MB/CM/CMP |
| | eUSB drive | /SYS/MB/EUSB_DISK |
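The DIMM names above use a compact bracket notation such as `MR[0-1]` and `BOB[0-3]1`. The following is a minimal sketch of how that notation could be expanded into individual names. It assumes that each `[a-b]` stands for an inclusive numeric range and that any digit following the bracket (the trailing `1` or `0` after `BOB[0-3]`) is literal. The helper name `expand_ranges` is hypothetical and is not part of any server tooling.

```python
import itertools
import re


def expand_ranges(pattern: str) -> list[str]:
    """Expand every [a-b] range in a name pattern into concrete names.

    Assumption: [a-b] is an inclusive numeric range; all other text is literal.
    """
    # With two capture groups, re.split returns:
    # literal, low, high, literal, low, high, ..., literal
    parts = re.split(r"\[(\d+)-(\d+)\]", pattern)
    literals = parts[0::3]
    ranges = [
        range(int(low), int(high) + 1)
        for low, high in zip(parts[1::3], parts[2::3])
    ]

    names = []
    for combo in itertools.product(*ranges):
        name = literals[0]
        # Interleave each chosen number with the literal text that follows it.
        for value, literal in zip(combo, literals[1:]):
            name += f"{value}{literal}"
        names.append(name)
    return names


if __name__ == "__main__":
    for dimm in expand_ranges("/SYS/MB/CM/CMP/BOB[0-3]0/CH[0-1]/DIMM"):
        print(dimm)
```

Under these assumptions, the motherboard pattern expands to 8 names and the memory riser pattern to 16. Confirm the result against the names the server actually reports before relying on it.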